Claim Audit Platform

Transforming the end-to-end claim audit experience

Industry / Market Sector

Health care, insurance, payer, enterprise, B2B

Challenge

Claim reviewers at Optum were using eight legacy platforms and over a hundred separate tools, supporting apps, and macros to perform claim audits. The overhead of switching between them and the cost of maintaining and updating the platforms were high. The Claim Audit Platform (CAP) was created to consolidate the legacy platforms and transform the end-to-end claim audit experience, reducing the cost of ownership and improving claim audit efficiency. The platform needed to support multiple payer tenants and many user roles, including internal claim reviewers, SMEs, analysts, and managers, as well as external commercial payer stakeholders and providers.

As a five-year capital project, CAP was a huge undertaking that involved 16 scrum teams and hundreds of stakeholders working across five time zones. I was brought in at the beginning of year two to manage the larger Payment Integrity UX portfolio at Optum and joined the project to lead the UX team of 8 designers, researchers, and accessibility engineers. As I dove into the complex world of health care claim auditing and optimizing the platform for our users, I first had to work out some issues with the project work environment.

My role

UX lead, UX portfolio manager, design, user research, UX strategy, project staffing.

In collaboration with

Fully remote project team located across three countries spanning five time zones, including program leadership, SMEs, operations, engineering, product, PMO, designers, accessibility engineers, and researchers.

Process issues

The first thing I did when I took over the project was to meet with UX team members and key stakeholders one-on-one. Besides forging relationships, I wanted to identify what was working and what wasn’t, and the pain points the team was facing. At the top of the list among stakeholders was the perception that UX was a bottleneck. Stakeholders felt that UX took too long, sometimes months, to turn around designs. This was negatively impacting the development schedule and the project velocity. Besides improving the turnaround, stakeholders wanted better visibility into the design progress and expected design completion.

Among the UX team, designers and researchers felt that they were never given enough time to thoroughly work on a design. They relayed that sometimes, engineering asked for a design just days before the start of implementation. Team members were unhappy that they weren’t given the time to properly implement a user-centered design process. They were worried that many solutions were being driven by how easy it was to develop and the speed with which we could get the feature out the door. There was an underlying concern that the team wouldn’t ever have the chance to make improvements to address user pain points and meet program goals.

Stakeholder and UX team member perceptions seemed to be polar opposites, and I knew there was more to each side. In digging deeper, I found that the UX team didn’t have insight into the development schedule. Engineering and other stakeholders thought they had handed off a feature for design simply by having brought it up in a discussion. UX was being looped into many requirements, operations, and engineering conversations, and was unclear on which features were considered pipeline features and which had already been slotted for an upcoming release. There was no clear handoff, no clear delineation between when requirements and planning ended and design officially started, and no target design completion date.

[Figure: Snippet of engineering sprint schedule]

In the following weeks after our discussions, engineering created a detailed sprint-level schedule on Confluence listing out all the scrum teams, team members, and the user stories for the upcoming sprints in the PI, with a clear indication of which involved UX. The visibility into engineering activities around features was valuable for UX and helped us assess the complexity of an upcoming feature. Understanding the capabilities and features that each scrum team was responsible for was extremely helpful in allowing UX to closely partner with architects and developers during design and implementation. On the UX side, we created and maintained a UX milestone tracker, which we used to provide visibility to other teams on the status of design backlog items. The UX tracker listed details around features, including the status, target delivery date, the assigned designer, notes on progress, completion date, and links to the corresponding Aha user story and Figma design. Both the sprint schedule and the UX milestone tracker became essential tools, not just for UX, but across the project, to monitor the progress of upcoming features and milestones, and were updated and maintained in subsequent years.

Ongoing touchpoints and communications

I set up regular standing meetings with engineering and other key stakeholders to discuss features coming down the pipeline and designs in progress. The touchpoints also provided a forum to bubble up issues, work on blockers, and adjust the sprint schedule. Where needed, we would break up complex features or swap features around to give UX the time for additional research or design iterations, while meeting engineering capacity and program velocity goals. To reduce “noise” and provide focus, I assigned designers to specific capability areas and released them from the expectation of attending every project meeting. I asked project managers and business analysts from the PMO to publish an agenda when sending meeting invites so designers could discern ahead of time which requirements and planning discussions they needed to attend. Within the UX team, I held UX workshops two to three times a week in addition to regular one-on-ones. The workshops gave designers a forum to bring challenges and gather peer feedback and ideas. They also let designers learn about the areas their peers were working on, which gave everyone a more holistic picture and helped ensure designs were consistent and dovetailed into each other well.

Working out kinks in the working environment

I held meetings with delivery managers and product engineering leads to discuss process improvements. I pointed out that the high-level engineering capabilities roadmap didn’t provide enough granularity for UX to be able to tell which UX features were needed when. We needed sprint-level visibility: a detailed breakdown of individual features and which of them required UX support, as well as the points of contact for each scrum team, so we knew whom to reach out to with questions. To make sure we had a clear handoff, I communicated that UX would need to know when a design was needed, one to three sprints ahead of when a feature was slotted for development.

MVP

My team spent about the first fifteen months after I joined the program supporting the design and implementation of hundreds of features for MVP. The initial focus was on incorporating key functionality from the legacy platforms, onboarding clients into the system, and making sure all capabilities needed on day one were complete. Within that timeframe, we successfully launched workstreams to support a handful of internal payer lines of business and external commercial payers.

Diving deep into claim auditing

While the program’s focus was on MVP, we hadn’t forgotten the objective to transform the claim audit process and improve efficiency. My team was continuously conducting generative and evaluative research to better understand our users.

[Figure: Clinical reviewer journey map]

Gathering insights and finding opportunities through research

I coordinated ongoing research initiatives such as usability tests, heuristic evaluations, and user observations. We used data gathered from research to improve upon features as we integrated them into the new CAP system. During the beginning months, when we focused heavily on MVP, it was often not possible, due to the timeline and short-term technical feasibility, to incorporate the optimal solution. As such, I needed to work closely with engineering, operations, and product partners to determine “fast follow” features that we couldn’t get into MVP, and triage UX recommendations and findings from our research. Some were turned into engineering backlog items to be addressed within the following program increments, while others were incorporated into the product vision plan to be revisited in subsequent years. In parallel with production design work slotted for development, I worked with my team on future enhancements high on the UX priority list so they’d be ready to be addressed whenever the development team had extra capacity.

[Figure: Organizing research findings]

[Figure: Research planning]

Optimizing the claim audit experience

As the project evolved and MVP was complete, we were able to dedicate more effort to optimizing the claim audit experience to improve the user experience and claim efficiency. One item that my team identified and successfully advocated for was the redesign of the Claims Cost Management (CCM) decisioning process, which depended on review of analytics data to assess whether a claim should be paid. The initial version of the design was created before I joined the project under an aggressive time box. Based on data gathered in qualitative research, my team had identified multiple pain points and areas for improvement. As the program prepared to launch a new CCM workstream, there was concern that the claim audit velocity wouldn’t be able to meet workstream throughput demands. My team closely collaborated with product engineering and operations to redesign the decision-making process, the most tedious and complex part of the audit.

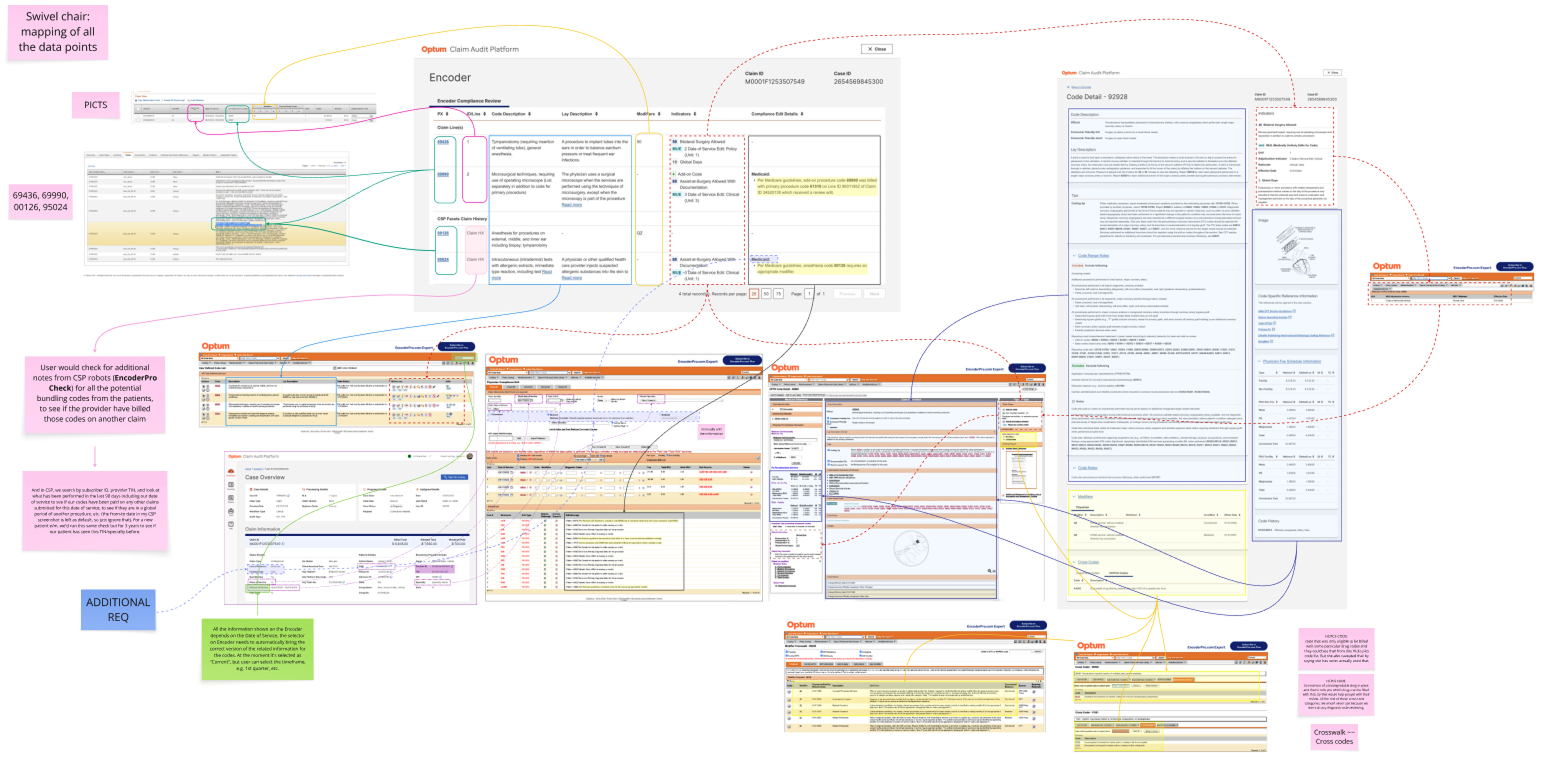

Commercial and facility claim auditing requires claim reviewers to review and validate every claim line and the medical procedure it represents to decide whether the claim line should be paid. Claim/coding analysts used external coding tools to look up and validate medical service and procedure codes. The process was tedious, as it required analysts to copy all procedure codes from the claim lines included in the claim and input them into the coding tool to search for the information available for each code. My team designed the integration of information from Encoder Pro, one of the most used coding tools across multiple workstreams. We held user interviews and shadowing sessions to understand how analysts were using Encoder Pro during claim review. Based on how we found analysts were using the data, we organized information from Encoder Pro to be served contextually when analysts reviewed the claim lines, and integrated data from multiple Encoder Pro screens into consolidated views to streamline the process. This feature eliminated the need for analysts to log into the Encoder Pro tool separately, manually input medical service and procedure codes, and sift through multiple views to review and consume coding information.

[Figure: Integration of Encoder Pro into CAP - Code detail page]

[Figure: Mapping data from multiple screens from the legacy Encoder Pro tool into a consolidated, streamlined view]

Streamlining clinical logic population

The most tedious part of a clinical claim review is looking through the medical record to confirm and validate the occurrence and necessity of each medical and service procedure. We designed a custom Clinical Decision Support document viewer that uses AI/ML to extract and bookmark data from the medical record, automating and streamlining the most time-consuming tasks in medical record review.

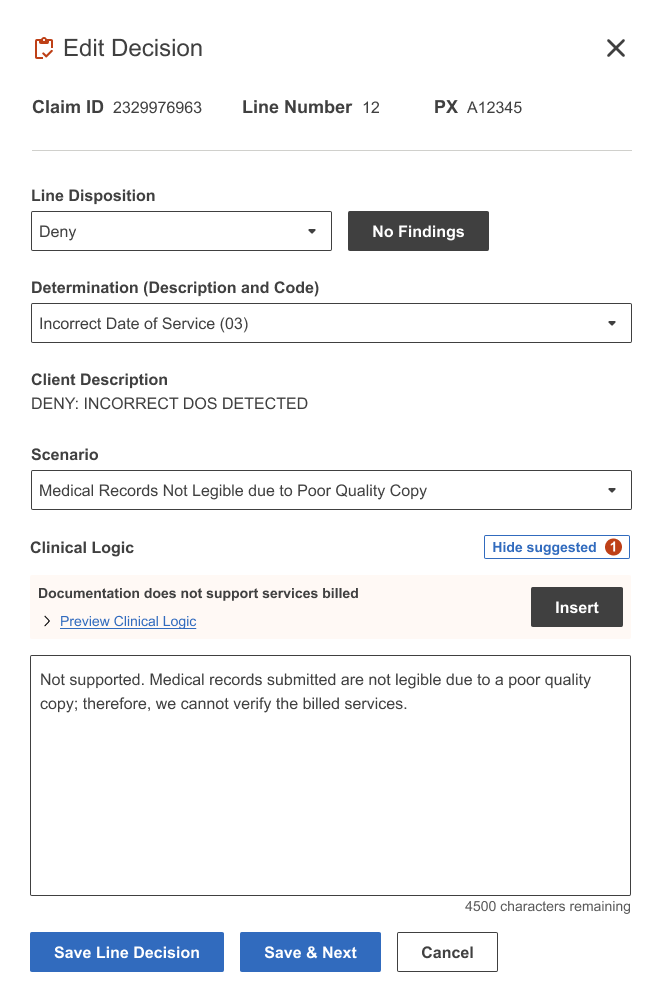

For every claim decision entered, claim reviewers must enter clinical logic text that explains their decision. In the legacy world, the operations teams managed a large Excel spreadsheet containing template text that analysts could copy and paste to populate the clinical logic. Analysts had to look through tab after tab of clinical logic scenarios, covering tens of thousands of procedure codes, to find the relevant text. We automated the process of finding clinical logic in CAP by integrating it into the claim line decisioning panel. Instead of the slow manual process of sifting through lines in a spreadsheet, the system presents the user with up to a handful of possible clinical logic choices based on the payer, procedure code, and disposition. A backend admin tool allows operations to manage the database of clinical logic text and keep it up to date.
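The lookup described above can be sketched in a few lines. This is a minimal illustration only, not CAP's actual implementation: the `ClinicalLogicTemplate` type, the `suggest_clinical_logic` function, the sample payers, codes, and template text, and the limit of five suggestions are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClinicalLogicTemplate:
    """One row of template text (hypothetical structure, for illustration)."""
    payer: str
    procedure_code: str
    disposition: str
    text: str


def suggest_clinical_logic(templates, payer, procedure_code, disposition, limit=5):
    """Return up to `limit` template texts matching all three keys."""
    matches = [
        t.text
        for t in templates
        if t.payer == payer
        and t.procedure_code == procedure_code
        and t.disposition == disposition
    ]
    return matches[:limit]


# Placeholder data; real payers, codes, and clinical logic text would come
# from the database that operations maintains through the admin tool.
TEMPLATES = [
    ClinicalLogicTemplate("Payer A", "PROC-001", "deny", "Sample denial rationale 1"),
    ClinicalLogicTemplate("Payer A", "PROC-001", "deny", "Sample denial rationale 2"),
    ClinicalLogicTemplate("Payer A", "PROC-001", "pay", "Sample approval rationale"),
    ClinicalLogicTemplate("Payer B", "PROC-001", "deny", "Sample denial rationale 3"),
]

suggestions = suggest_clinical_logic(TEMPLATES, "Payer A", "PROC-001", "deny")
```

Filtering on all three keys is what replaces the tab-by-tab spreadsheet search: the reviewer sees only the few templates that apply to the claim line in front of them.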

Impact

I was with the CAP project for three years before a reorg took me to a new assignment. During my time, I successfully elevated UX’s influence and visibility and improved internal relationships and ways of working. My team’s work contributed significantly to improving the claim audit efficiency and program ROI. Below are just a few of the results of our efforts:

Successfully incorporated features from legacy systems and tools and sunsetted legacy platforms, resulting in a reduced cost of ownership.

Transformed the Claims Cost Management workflow, increasing CCM claim audit capacity by 17%, from about 5 claims to over 6 claims an hour.

Improved the clinical audit workflow to achieve a 29% reduction in handle time.

Increased the usability of the claim audit experience, with research revealing a 10-point improvement in SUS score over legacy benchmarks.

Reduced swivel to external tools and manual steps by leveraging AI/ML, resulting in significant annualized savings. For example, work completed in Q3 2024 alone resulted in annualized savings of $27 million.